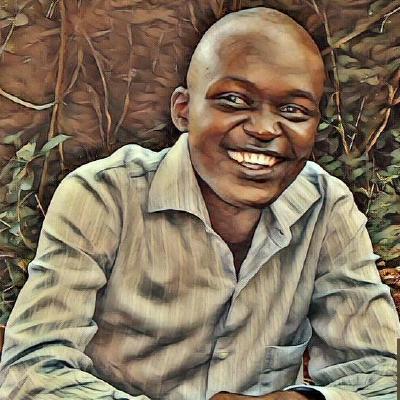

Nirant Kasliwal, one of the active members of the fast.ai community, made an offer to answer any NLP and data science questions for free all through January. Having had lots of questions of my own on fast.ai, deep learning and NLP, I scheduled a 30-minute call for 21st January 2019. I sent my questions in advance and got some of them answered during the call. These are my notes from the call, not verbatim answers.

How did you choose NLP over other ML areas?

There has been great interest in deep learning, especially in computer vision (CV), and a great number of talented people have put their efforts into that area. The same is not currently true for NLP. This has at least two consequences.

First, it is harder to do groundbreaking work in CV and gain visibility than it is in other domains of AI/ML. Second, there are quite a lot of opportunities in NLP to work on problems that no one else is tackling and to have an impact.

How do you do your research?

One approach is to work backwards and peel off the layers. When solving a task, look for answers to the problems you face along the way. For example, if you are stuck with a small dataset, one thing to research would be "How do we train a model when we have a small dataset?"

As more challenges come up, research the areas that interest you and the ones where you keep running into problems.

Reading ML papers: sources of papers and how to read them

ML research is mainly published in papers presented at conferences or in journals. A good source is the proceedings of conferences such as ICML. It also helps to set up automated alerts (e.g. Google Alerts) for the topics or areas you are interested in, so that you are notified when new papers are published in those areas.

He used to read about 30-50 papers a month, but since the beginning of this year he has shifted to the engineering and tooling side of ML, e.g. deployment and scalability.

When reading a paper, he suggests the following. Read the abstract and conclusion first. The purpose is to understand the core ideas presented in the paper and to gauge whether it is interesting or relevant to what you are researching. Read the methodology last, and at first focus on the core ideas rather than the math. Read related papers to get a more in-depth understanding of the topic.

As for the merit of reading so many papers, it pays off in many ways. Many papers will not contain groundbreaking ideas, but chances are that you will find one good paper with even a single idea that gives a good return on investment (ROI). The challenge is that you cannot pick one paper at random and hope that a great idea pops out of it. By reading several papers, you increase your chances of stumbling upon that great idea.

Deployment options for ML models

He generally deploys models using Flask, a Python micro-framework. The models are exposed through RESTful APIs.
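To make the idea concrete, here is a minimal sketch of what exposing a model behind a Flask endpoint could look like. This is my own illustration and not Nirant's actual setup: the `/predict` route, the JSON field names and the `predict_sentiment` function (a keyword counter standing in for a real trained model) are all assumptions made for the example.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


def predict_sentiment(text):
    """Hypothetical stand-in for a trained model: counts a few keywords."""
    positive = {"good", "great", "love"}
    negative = {"bad", "poor", "hate"}
    words = text.lower().split()
    score = sum(w in positive for w in words) - sum(w in negative for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Assumed route name and payload shape: POST /predict with {"text": "..."}
@app.route("/predict", methods=["POST"])
def predict():
    payload = request.get_json(silent=True)
    if not isinstance(payload, dict):
        payload = {}
    text = payload.get("text")
    if not isinstance(text, str) or not text.strip():
        # Reject requests that do not carry usable input
        return jsonify(error="JSON body must contain a non-empty 'text' field"), 400
    return jsonify(label=predict_sentiment(text))
```

Calling `app.run()` would start Flask's built-in development server for local experiments. In a real deployment you would most likely load the trained model once at startup, call it in place of `predict_sentiment`, and serve the app behind a production WSGI server.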

What are some of the most enlightening papers you have read?

Michael Nielsen is one of the polymaths of our time and he writes on lots of topics including science and philosophy. Most of what he writes gives new perspectives, clarity and helps in the generation of new ideas. It is worth looking at whatever writing he does.

At the time Nirant was moving into ML, there was no fast.ai. He learnt the fundamentals of ML by reading documentation, especially that of scikit-learn, to the point that he knows it by heart. Several MOOCs also helped with the learning. He took the Analytics Edge from MIT in 2013, which uses R. He also read a few hundred blog posts on solving ML problems between 2014 and 2018.

He also maintains a list of resources in the Awesome NLP repo.

Conclusion and Thoughts

Machine learning has the capacity to help us solve problems in various domains that would otherwise be difficult or impossible to solve. Through the internet, there is a great opportunity to learn about the cutting-edge work being done in academia and industry at almost no cost, save for the time investment.

A great place to start is perhaps to take MOOCs or online courses like fast.ai and to read blog posts on areas of interest. A lot of interesting, cutting-edge ideas are found in conference papers, so read the most interesting ones and extract the core ideas. Take notes and try to replicate the results. Learn by doing, and remember to share what you learn.

Happy Learning

PS: Thanks a lot to Nirant for the opportunity he has given many people by offering his expertise for free to anyone who wants to learn ML, and NLP in particular.